Three reviews of the three top Jira Test Management "add-in" applications. We've picked the Jira Test Case Management tools with the biggest install bases, based on the install figures Atlassian publishes for these tools on the Atlassian Marketplace. We're taking each of these three top tools in turn and providing a detailed deep-dive review written by our specialist in the Test Management domain. At the time of writing, the install figures are:

| Tool | Installs |
|---|---|
| Zephyr for Jira | 17.7k |
| XRay | 12k |
| Zephyr Scale [TM4J] | 6.4k |
| QMetry (review coming soon) | 1.2k |

You will find no 'star' reviews here. You can NOT base the implementation and roll-out of a test management tool on a simple star rating. The aim is to review the tools and help you understand how they will meet your requirements. You do know what your requirements are, don't you? If you're not clear on them yet, take a look at our How to Evaluate Test Management Tools document.

We've gone through each tool, and in the reviews below we call out the key aspects and findings for each one. How much those aspects and findings matter will depend on your implementation. It will take some work on your part to assess these tools and work out which one is the best fit for your organisation. If you need help with that evaluation we'd be happy to help, starting with a free 30-minute review session.

Looking to migrate from a legacy test management tool like HP ALM?

Talk to us – we can simplify the migration for you.

All of the tools reviewed conform to the usual testing terminology. You'll find the concepts of Cases, Libraries, Scripts, Cycles, Plans, Runs, Results and Environments all used consistently across all the tools. Where there are differences we'll call them out.

One of the most important things to consider when choosing a tool is the entity relationship model it implements: that is, the relationships modelled between entities like Epics, Stories, Plans, Cycles, etc. Whilst these relationships are quite similar across the tools, there are distinct differences, and those differences can have a big impact if they don't fit the way you work. We outline each tool's entity model as we review it and call out the differences.

Let's get started then. First up is Zephyr Scale (ZSC), followed by XRay and then finally Zephyr for Jira (ZFJ).

Zephyr Scale Cloud (ZSC), formerly TM4J Cloud, is pitched as "a full-featured test management solution that seamlessly integrates with Jira". Well, all the solutions out there say pretty much exactly the same thing. So let's dig deeper and find out what 'full-featured' means.

ZSC implements the standard set of components you'd expect: Test Plan, Cycle, Case and Result. It doesn't try to give them stand-out names for the sake of differentiation, as some tools do. This keeps things simple and makes it easier to get started.

With this structure ZSC allows you to work in several different ways. They define these ways as 'Workflow Strategies'. Or if you want to be less highfalutin then think of it as 'Usage Scenarios'. The idea is that you can configure ZSC to fit different methodologies. Either Agile or Waterfall methodologies. You tweak the setup to fit your particular implementation of those methodologies.

In practice this means you're working in one of three ways: with Test Cases linked to stories (no Test Plans or Test Cycles); with Test Cycles (but no Test Plans); or with Test Plans that contain one or more Test Cycles. If you're working with Test Cycles only, the idea is that you're working in a more Agile way, linking test cycles directly to a User Story or other Jira issue.

So you have one, two or three hierarchy levels, made up of cases, cycles and/or plans. Anyway, I digress. Let's start at the bottom and work our way up.
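To make the three strategies concrete, here is a minimal Python sketch of the hierarchy, assuming plain dataclasses stand in for ZSC's entities. The class names, fields and keys (e.g. DEMO-R1, DEMO-P1) are hypothetical, not ZSC's data model or API. It encodes the rule that a plan holds only cycles and a cycle holds only cases; each workflow strategy simply stops at a different level.

```python
from __future__ import annotations
from dataclasses import dataclass, field

# Hypothetical stand-ins for ZSC entities; not ZSC's real data model.

@dataclass
class Case:
    key: str                                                # e.g. "DEMO-T1"
    linked_issues: list[str] = field(default_factory=list)

@dataclass
class Cycle:
    key: str
    cases: list[Case] = field(default_factory=list)         # a cycle holds cases
    linked_issues: list[str] = field(default_factory=list)

@dataclass
class Plan:
    key: str
    cycles: list[Cycle] = field(default_factory=list)       # a plan holds cycles, never bare cases

    def all_cases(self) -> list[Case]:
        """Flatten plan -> cycles -> cases."""
        return [case for cycle in self.cycles for case in cycle.cases]

def hierarchy_levels(top) -> int:
    """How many levels a workflow strategy uses: 1 = cases only, 2 = cycles, 3 = plans."""
    if isinstance(top, Plan):
        return 3
    if isinstance(top, Cycle):
        return 2
    if isinstance(top, Case):
        return 1
    raise TypeError(f"unexpected entity: {top!r}")
```

A team working "cycles only" would just never create a `Plan`; linking a `Cycle` straight to a story via `linked_issues` gives them their coverage.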

Everything in ZSC starts with Tests. You build out your test cases in isolation within the 'Tests' area in ZSC. This meets the usual model where we have a library of tests. Although it's not specifically called a test library. Of course you have the usual set of features that allow you to group your tests by folders and/or labels. Always useful!

You can create your test cases individually, in bulk, or import them (from CSV, Excel or other ZSC instances). Each test case you create uses one of three script types:

– Step-by-Step

– Plain Text

– BDD – Gherkin

You can define each test case in all formats at the same time and flip between them without losing anything. When you come to execute the tests, the type you have selected is the one you'll execute with. You can't flip between types at execution time (not sure you'd even want to). Flipping between types without losing the data in the others is a neat touch: it means you could start out with a 'Step-by-Step' approach and then migrate to 'BDD – Gherkin'.

As part of your test development you have the all-important "Call to test" feature. When you define a step, you can define it as a call to another test case. The case you're writing then has all the test steps from the called test case embedded in it. If you update the called test case, those updates are reflected in the calling test case. It works as you'd expect.
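A conceptual Python sketch (not ZSC's implementation; all names are hypothetical) of why call-to-test behaves this way: the calling case stores a reference to the called case, and the steps are expanded when they're read rather than copied in when the step is created. That's what makes edits to the called case flow through to every caller. The sketch also rejects circular calls, which is an assumption about sensible behaviour rather than documented ZSC behaviour.

```python
from __future__ import annotations
from dataclasses import dataclass, field
from typing import Union

@dataclass
class Step:
    text: str

@dataclass
class CallToTest:
    called_key: str            # key of the test case whose steps get embedded

@dataclass
class Case:
    key: str
    steps: list[Union[Step, CallToTest]] = field(default_factory=list)

def expand_steps(key: str, library: dict[str, Case],
                 _seen: frozenset = frozenset()) -> list[str]:
    """Resolve call-to-test steps at read time, so the called case's latest steps are used."""
    if key in _seen:
        raise ValueError(f"circular call-to-test involving {key}")
    result: list[str] = []
    for step in library[key].steps:
        if isinstance(step, CallToTest):
            # Follow the reference instead of holding a copy of the steps.
            result.extend(expand_steps(step.called_key, library, _seen | {key}))
        else:
            result.append(step.text)
    return result
```

Changing a step in the called case and calling `expand_steps` again on the caller returns the updated text, with no separate sync step.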

Custom fields can be defined at both the test case and test step level. You can also create custom fields for cycles, plans and executions, but we'll come on to that later. All the usual field types are supported (e.g. checkbox, date, number, select list, etc). Support for multi-choice select lists is an unexpected find, and very useful. Note that you have to specify custom fields for each project. Maybe you have a standard set of custom fields you need to apply across all projects? If so, you'll need to apply them every time you set up a project. This can lead to inconsistencies across projects: manageable, but a bit of a pain. That's me being quite critical though. ZSC's custom fields are well thought through and executed.

ZSC also has a concept of Data sets. You can create 'data sets' as separate entities and then link them to a 'Step-by-step' type of test case. This is a clever feature that allows you to define customized sets of test data outside of the test case.

For example, you define the data set metadata as a project 'data set' and reuse it across different test cases. Within each test case you select that data set (i.e. the metadata definition) and then enter the test data specific to that test case. Once it's defined, it's easy to reference the data in a step by wrapping a column name in {}. A clever way to capture and use your test data.
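Conceptually, a data set is a table whose column names double as placeholders in the step text. A minimal Python sketch of that substitution, assuming each data-set row produces one rendered copy of the steps (one iteration) and that placeholders take the form {column}; the function names are hypothetical and this is not ZSC's implementation.

```python
from __future__ import annotations
import re

PLACEHOLDER = re.compile(r"\{(\w+)\}")   # matches {column_name}

def render_step(template: str, row: dict[str, str]) -> str:
    """Replace each {column} placeholder with that column's value from one data-set row."""
    def lookup(match: re.Match) -> str:
        column = match.group(1)
        if column not in row:
            raise KeyError(f"data set has no column named {column!r}")
        return row[column]
    return PLACEHOLDER.sub(lookup, template)

def expand_for_data_set(steps: list[str], rows: list[dict[str, str]]) -> list[list[str]]:
    """One rendered copy of the step list per data-set row (assumed: one iteration per row)."""
    return [[render_step(step, row) for step in steps] for row in rows]
```

Keeping the column definitions at project level and the values per test case, as ZSC does, means the same `steps` can be driven by different `rows` in different cases.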

Parameters are another test case feature (by the way, it's either Data Sets OR Parameters; you can't have both). You define the default parameters for a test case and use them in the test steps, again with the {param_name} syntax. Don't rename a parameter after you've used it in a test step, though: the name won't update automatically in the steps. A small point but worth remembering. Get the naming of your parameters right at the start!
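If you script against exported test cases, a quick check for orphaned placeholders catches exactly this rename problem. A hedged Python sketch, assuming steps are plain strings using the {param_name} syntax and parameters are a name-to-default mapping; the function name is hypothetical.

```python
from __future__ import annotations
import re

PLACEHOLDER = re.compile(r"\{(\w+)\}")

def unresolved_parameters(steps: list[str], parameters: dict[str, str]) -> set[str]:
    """Names used as {name} in the steps that no longer match any defined parameter."""
    used = {name for step in steps for name in PLACEHOLDER.findall(step)}
    return used - set(parameters)
```

An empty set means every placeholder still resolves; anything else points at a step that still uses an old parameter name.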

On the traceability side of things, when designing your test cases you can link them to Jira issues (e.g. a User Story). Or, when creating your User Stories, you can link and add tests. It's simple either way round.

Test cases can also be linked to issues in other projects. This works well when you need a dedicated Jira project for test cases, perhaps for a set of test cases (e.g. security tests) that you need to run against many different projects. It's an advanced feature usually only available in high-end test case management tools, so it's a pleasant surprise to find it in a Jira add-on in this domain. A great feature to have.

Under traceability you can also see the status of the linked issues, so it's easy to see whether your tests are linked to issues that are on the backlog or in a particular sprint.

Whilst it's possible to add attachments to test cases, there is one slight limitation: they can't be added to individual test steps. You either add an attachment at the test case level or you don't add it at all. You can get round this by embedding images and links in the 'test data' HTML editor, but that's a pretty clumsy approach. It's worth mentioning too that attachments can't be viewed from within Jira. So if you add a .png file to a test case, you have to download it before you can view it. That's a pain! Whilst attachments are supported, I'd say they could do with a few enhancements.

Test cases can be versioned. Another advanced feature that'll be important for many teams. You control when and where you increment a version; it's not managed automatically for you. So you can make lots of changes to a test case without incrementing the version. In other words, version control isn't enforced. Having said that, there's a very detailed list of changes for each version, with all the changes tracked under the test case's history. There's a good balance here between the way version control and history work together. Be wary though: if your organisation demands enforced version control, ZSC won't give you that. Don't let that put you off. What's delivered here is a very capable feature that will work for pretty much all teams.

When you do create a new version it's still the same Jira issue, you see the issue id go from say DEMO-T1 (1.0) to DEMO-T1 (2.0). It would appear that you can't increment by decimals (e.g. 2.0 to 2.1) though. I never managed to work out how to do this – it wasn't mentioned in the user guide. Be aware too that you can only create a new version from the latest version (not previous versions) of a test case. That's no real hardship though. Under the history tab for the test case you can also compare the changes across versions. Version control, history tracking and test case comparison make up a powerful feature set.
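The versioning rules observed above (whole-number increments only, and new versions only from the latest version) can be captured in a few lines. A minimal Python sketch that models the behaviour seen in this review, not ZSC's implementation; the class name is hypothetical.

```python
from __future__ import annotations
from dataclasses import dataclass, field

@dataclass
class VersionedCase:
    key: str                                                     # e.g. "DEMO-T1"
    # versions[i] holds the content of version i+1; starts with version 1.0
    versions: list[dict] = field(default_factory=lambda: [{}])

    @property
    def label(self) -> str:
        """Same Jira issue key, with the whole-number version appended."""
        return f"{self.key} ({len(self.versions)}.0)"

    def new_version(self, from_version: int) -> str:
        """Create the next whole-number version; only the latest version may be the source."""
        latest = len(self.versions)
        if from_version != latest:
            raise ValueError(f"can only create a new version from {latest}.0, not {from_version}.0")
        self.versions.append(dict(self.versions[-1]))            # carry the latest content forward
        return self.label
```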

Another great feature is the bulk creation of test cases. Sometimes when you're outlining your tests you want to put in a load of test case placeholders: a list of test case titles that you go back and fill out later. ZSC makes this easy. Open the bulk create option, add a list of test case titles, and create all those test cases in one go. They can all be created with a particular status, priority, component and owner, and you can add labels at this stage too. This makes it easy to build out that initial structure of test cases.

So lots of well implemented features at the test case level. How does this scale when it comes to grouping and executing tests? We look at that next.

In ZSC, think of a test cycle as a collection of related test cases, for example the test cases that cover a specific functional area. Grouping tests into a cycle makes it easier to assign them to a user, assign them to an environment and link them to a test plan. Cycles also make it easier to report on specific aspects of your testing.

Test cycles don't have to be run under a test plan (more on plans below). Each cycle can live as an entity in its own right, with the cases in that cycle each run independently as individual executions. So you might have TestCaseA and TestCaseB in a cycle, where TestCaseA has been run once but TestCaseB has been run three times. In other words, you have a set of tests in a cycle that can be run against different versions of the application under test. The overall cycle status aggregates the latest result from each test case. Note that once you create the next execution of a test case, its previous run is locked. That's a bit of a pain if you want to update the Version or Environment it was executed against.
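Aggregating "the latest result from each test case" is the key idea behind the cycle status (and the 'Last Test Execution' report option covered below). A minimal Python sketch, assuming each execution is recorded as a (test case, run number, status) tuple where a higher run number means a more recent run; ZSC's own storage will differ, and the function names are hypothetical. Statuses use ZSC-style names such as Pass and Fail for illustration.

```python
from __future__ import annotations
from collections import Counter

def latest_results(executions: list[tuple[str, int, str]]) -> dict[str, str]:
    """Keep only the most recent result per test case.

    Each execution is (test_case_key, run_number, status); higher run_number = more recent.
    """
    latest: dict[str, tuple[int, str]] = {}
    for case, run, status in executions:
        if case not in latest or run > latest[case][0]:
            latest[case] = (run, status)
    return {case: status for case, (_, status) in latest.items()}

def cycle_summary(executions: list[tuple[str, int, str]]) -> Counter:
    """Count statuses across the cycle, using only each case's latest run."""
    return Counter(latest_results(executions).values())
```

With the example from the text, TestCaseB's two earlier failures are ignored once its third run passes, so the cycle reports two passes.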

What is useful in the cycle is the ability to track time and raise defects directly during execution. From the cycle's main page you then get clear visibility of execution status: the number of issues raised, the number of tests in the cycle and the status of those tests. There are some clever touches in ZSC, like the ability to 'Set Status of All below'. Perhaps you've completed some tests and want to update the rest to 'Blocked'. That's two clicks, with no tedious search and update required.

From a Jira issue, like a story, you can also create a new test cycle or link to an existing one. This makes it easy to link one or more cycles to provide test coverage of, say, a story or a defect. There's one slight problem with this: the test cycle results shown when viewing the issue could be results from a previous version. Traceability between the execution Version and the Jira issue (e.g. Story or Defect) is not clear. Probably not a problem for most teams though.

This capability allows you to track both individual cases and cycles against a user story. Useful in many scenarios. Say you have a regression pack in a cycle to run and a handful of focused tests you need to run against the user story. This is the way to do it.

At this point then, we have tests and we have cycles. If we want to build things up with another level then we have the Test Plan entity.

A plan contains one or more cycles, and a cycle contains one or more test cases. A plan can only contain cycles; it cannot contain individual tests. This is different to a lot of tools, where it's the plan that contains the tests. Semantics at the end of the day though. I like this structure. It's simple and scalable.

Test Plans in the ZSC world are optional. If you want you can work with Cycles only. You link those cycles to the different issue types (e.g. Stories and Defects) to give you your test coverage. Or you could stick to cases and link those to the different issue types.

There is another place where ZSC Test Plans can be useful. This is if you're following the traditional waterfall approach of documenting the release. Remember the old IEEE 829 Test Plan standard? Yes, you can document things like Scope, Resources, Environments, etc here. What a Plan is really used for though is to group one or more Cycles.

In short, Plans allow you to document and group your Cycles and add supporting material (e.g. attachments). They're no more than a container sitting at the top of the tree. When you view the actual Plan there's only a limited amount of information: a list of the test cycles and their status. At a fundamental level that's all you're interested in. However, a nice touch would be some reporting directly from the test plan, or the ability to customize the data displayed about cycle execution. Where the Plan comes into its own is as a container for focusing your reporting. We'll talk more about this in a moment.

Execution works at either the individual test level or the cycle level: execute individual tests directly from a user story, or go off and run a complete cycle. Not only does this dual approach provide flexibility, it works rather well too.

If you have a test case linked to a user story you can execute that test from within the user story. As part of that execution you can link to the environment you're running against. Make sure it's assigned correctly though. The execution workflow is so simple that it makes this really effective.

When it comes to executing cycles, things are just as straightforward. Select your cycle from the list and you jump straight to the "Test Player". Step through the test cases and the individual steps within each case, updating the actual results and adding attachments as you go.

There's support for all the standard report types you'd expect: reports that cover development of tests, assignment/execution and traceability. At the time of writing, reporting is being switched over from a legacy format to a new one. Whilst you can use both, swapping between the two types feels a bit 'clunky'. The new report types currently only cover Execution and Traceability, but that's still a lot of reports to work with: 22 execution reports and 4 traceability reports.

The configuration for each report is well supported. You can report across projects, filter on criteria like Versions, and choose to display the 'Last test execution' only or 'All test executions'. There is one significant limitation: you can't save the report criteria you define. You run the report based on a set of criteria, and if you want to run that same report later you need to remember the criteria you specified. It would be nice to be able to save them.

Reports are generated quickly and displayed clearly in the new reporting engine. There are little touches that impress. There is the ability to configure an execution report to use either 'Last Test Execution' or 'All Test Executions'. That 'Last Test Execution' option is useful. You can aggregate test results across different versions/releases. Then you get an overall test status that's often difficult to collate with many tools.

Jira gadgets are well supported. You can add various gadgets to your dashboards, and the criteria for each gadget can be specified in the same way as for reports. There's also a neat free application to pull your reports from ZSC into Confluence. This lets you share your test reports with the rest of your project, or merge them into other project documentation stored in Confluence.

For those of you who are reporting junkies, there's also integration with eazyBI for Jira. You can pull all your testing data into eazyBI, which is powerful and gives you room to build your own custom reports. Only a few tools out there deliver this capability.

Several useful features adorn the test automation side of ZSC. The first is the Jenkins plugin, which lets you pull the BDD test cases from ZSC directly into Jenkins. It also lets you push the test results back to Zephyr Scale.

There's also a well-specified REST API. It's a bit strange that it's defined with RAML and not Swagger, Swagger being a big part of the SmartBear estate. Having said that, ZSC is a fairly recent addition to that estate, and I don't expect it to be long before the API definition is switched from RAML to Swagger. That's a minor point though. The REST API has all the methods you'd need if you were integrating ZSC with your own automation suite. Nothing lacking here.
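As an illustration of that kind of integration, here is a hedged Python sketch that builds (but does not send) a request recording one execution result. It assumes the Zephyr Scale Cloud API v2 base URL `https://api.zephyrscale.smartbear.com/v2`, bearer-token authentication, and a `POST /testexecutions` endpoint taking `projectKey`, `testCaseKey`, `testCycleKey` and `statusName`; check all of these against the current API reference before relying on them. The function name is hypothetical.

```python
from __future__ import annotations
import json
import urllib.request

# Assumption: Zephyr Scale Cloud API v2 base URL; verify against the API reference.
BASE_URL = "https://api.zephyrscale.smartbear.com/v2"

def build_execution_request(token: str, project_key: str, test_case_key: str,
                            test_cycle_key: str, status_name: str,
                            environment_name: str | None = None) -> urllib.request.Request:
    """Build (but do not send) a POST that records one test execution result."""
    body = {
        "projectKey": project_key,
        "testCaseKey": test_case_key,
        "testCycleKey": test_cycle_key,
        "statusName": status_name,          # e.g. "Pass" or "Fail"
    }
    if environment_name:
        body["environmentName"] = environment_name
    return urllib.request.Request(
        url=f"{BASE_URL}/testexecutions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )
```

Sending it is then a call to `urllib.request.urlopen(req)` with a real API token; keeping request construction separate makes it easy to test your automation glue without touching a live instance.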

What I like about ZSC is the navigation simplicity. You can flick between Tests, Plans, Cycles and Reports with ease. Useful pop-up dialogue boxes seem to anticipate your next move, with links to the next step in your workflow. A lot of thought has gone into this.

The act of planning your tests is not particularly well supported at the moment. You can organise your testing by cycles. Yet, it's not possible to schedule when you want to execute the tests in those cycles. I'm guessing that's work in progress because the new 'Burndown' reports will need that data to work.

Another potential enhancement for the future is workflow. Currently there's no ability to enforce workflow status changes. You can define your own 'status' values for test cases (e.g. Draft, In Review, etc). Just that there's nothing controlling or enforcing the progression through those status values. Not a big issue but could be important for some teams.
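What enforcement would mean is easy to show. A minimal Python sketch of a status transition table, purely hypothetical: 'Draft' and 'In Review' come from the text above, 'Approved' and 'Deprecated' are assumed, and nothing here reflects a ZSC feature, since ZSC doesn't enforce transitions today.

```python
# Hypothetical workflow: which status each status may move to next.
ALLOWED_TRANSITIONS = {
    "Draft": {"In Review"},
    "In Review": {"Draft", "Approved"},
    "Approved": {"Draft", "Deprecated"},
    "Deprecated": set(),
}

def change_status(current: str, target: str) -> str:
    """Return the new status, or raise if the workflow doesn't allow the move."""
    if target not in ALLOWED_TRANSITIONS.get(current, set()):
        raise ValueError(f"transition {current!r} -> {target!r} is not allowed")
    return target
```

Without a table like this, nothing stops a test case jumping straight from 'Draft' to 'Approved', which is exactly the gap described above.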

Don't let those two points put you off though. This is a well thought out test management solution, with lots of features comparable to the enterprise solutions out there. It's well integrated with Jira, simple enough for beginners to use, and has plenty of advanced features that will allow you to scale (hence the name, I guess). Overall ZSC does what it says on the tin: "A full-featured test management solution that seamlessly integrates with Jira". It's a solution that feels like it's been cleverly woven into Jira.

Xray pitches itself as …

"Cutting Edge Test Management for Jira".

Which doesn't actually tell me a lot. As you start to dig deeper you'll find statements like 'Unifying Testing and Development', 'Organising complex tests' and 'Integration with DevOps'. We like the sound of that, so let's see if XRay lives up to expectations.

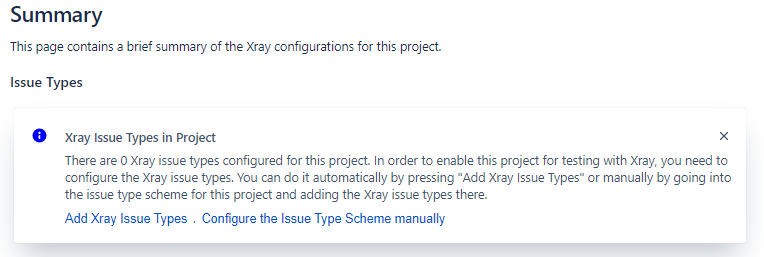

The first thing that impresses with Xray is the well organised and helpful start page…

Configure


Like the other Jira test management add-ins, you configure XRay for the project you want to use it on. Following straight on from the start page, you can do this with one click. It's all clearly explained and configured for your project in seconds.

First Impressions Count – and I counted a lot here.

What hits you once you've configured the issue types (easy to do) is that there are so many settings and options. Sticking to the defaults will get you started, yet there's a lot here that will allow you to customize your install as you progress. You will need to set up the issue types that you want to cover with tests. This is a simple drag and drop to tell Xray that you want, for example, 'Stories' to be covered by tests. It's worth flicking through the settings at the start. Just don't get too distracted by all the knobs and levers.

As you go through the configuration you'll come across a section for Layouts. They've made the test layout highly configurable, a nice touch which most tools ignore. There's always so much information to squeeze in and display for a test case record, and you're often left with a very cluttered display. Being able to configure the layout easily makes life easier for end users.

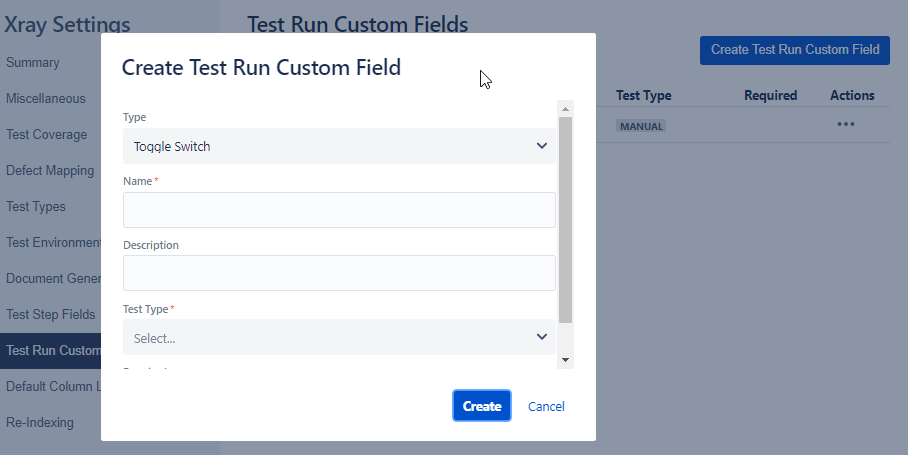

Custom Fields


You'll also find custom fields are configurable for Test steps and Test Results, with types ranging from Toggle switch to Multi Select lists. Custom field types for test results are configurable by the individual types of tests (e.g. manual or BDD). If you need to add custom fields at the test case level, that's done with the core Jira functionality. Everything you need is covered either within Jira or as part of the XRay add-in.

You have your setup defined. Now we need to understand the project structures XRay allows you to work with in Jira. There are several very configurable ways to structure your test projects.

https://docs.getxray.app/display/XRAY32/Project+Organization

1. Everything in one project – all your stories, defects, tests, test plans, etc are in one Jira project.

2. Separate Development and Test Projects – user stories, defects, etc in one project. Tests, test plans, etc in a completely separate Jira project.

3. Separate Development, Test Case and Test Execution Projects – user stories, defects, etc in one project. Tests and Test Sets in their own project. Test plans and Test Execution in their own Jira projects too.

4. Separate Requirements, Defects, Tests and Execution Projects – User stories/requirements in their own project. Defects and bugs in their own project. Tests and Test Sets in their own project. Test plans and Test Execution in their own Jira projects too.

Options 1 and 2 are the more common ways to structure your projects. We'll talk about the pros and cons of each approach in a bit. This gives you a lot of flexibility, and flexibility you should experiment with, so that you select the approach that's right for your projects.

So you've decided on the structure you want to use. Next you'll need to understand XRay's workflow and entities. XRay sticks to a very clear and familiar format (we like familiar).

– Tests

– Pre-conditions

– Test Sets

– Test Plans

– Test Executions

– Sub-Test Executions

From your project's 'Testing Board' you start with your Tests in the Repository. Obviously (we like 'obvious') the Repository is the container for all your test cases. Then there's the Test Plan, which acts as the container for a group of tests. From the Testing Board menu that would seem to be it. Nowhere else to go.

Only two options on the Testing Board menu to select from: Repository or Plans. I have to admit I find this a little confusing as there's so much more to XRay (Sets, Pre-conditions, etc). In general though the workflow you'll follow is…

1. Create Tests (in the Repository)

2. Group Tests by Sets (create issue of type = Test Set)

3. Define Pre-conditions (create issue of type = Pre-condition)

4. Create Plan

You'd think that the test entities (like Sets, Pre-conditions, Executions, etc) would be accessible from the 'Testing Board' menu. They're not. Only a minor gripe as you'll start to find your way round fast enough. I expected everything in one place on that 'Testing board'.

Anyway, create some sets and pre-conditions (just as you would create normal Jira issues). Then all the magic starts to happen when you drill down into the Plans. When you do, you'll find you can add Tests or Test Sets to your plan. Once you've added them, you can kick off an execution.

Pretty straightforward workflow and concepts that are easy for any tester to pick up. So let's look at each entity in a little bit more detail then.

You create tests in the test repository. You start out with a familiar folder structure that gives you a warm, cosy feeling right from the start. It's familiar and easy to grasp. Then you create your first test the usual Jira way: click the big blue 'Create' button and select an issue type of 'XRay Test'. As you create the test you can link it to a User Story. This simple step defines your coverage using the standard Jira Linked Issue option.

Now this could be me being pedantic, although more likely it's down to the way XRay tries to conform to the way Jira works. It just never feels easy to add tests. You're constrained to entering a test as a standard Jira issue, so you can define only the basic Jira info at the start. Then you have to navigate to the issue to enter all the test steps. It seems a bit clumsy. All you want to do is add all the test case details in one go, not fiddle around navigating all the time.

Once you've added the test case record, you then need to populate the details. Open up the test case record and you have all the steps, preconditions, sets, plans and runs sections. It's all there! Creating steps is a doddle. Rich text format means you have a lot of scope for crafting the detail. Coupled with a pop out grid view for entering steps there's nothing holding you back here. It's a clean and tidy user experience.

When editing steps you can import steps, with several options including JSON, CSV and the clipboard. What's useful, though, is the ability to import from other tests. This makes it easy to build out test steps based on steps you've already written. Be aware, though, that this isn't a 'call to test' kind of feature: if you update the steps in the original test case, XRay won't automatically update the steps in the new test case. A bit limited, but granted this is an advanced feature that most teams can live without.

You can define your tests as the usual 'Manual' test type, where you write out the test steps. Alternatively you can set your test case as a 'Generic' or 'Cucumber' type test. Be very careful when you change the type: you'll lose whatever you've written under the original test type. Generic tests are, as the user guide says, "neither Manual nor Cucumber". They are simply a test with a description that you can track a result against. You could use them for tracking exploratory test sessions or, as discussed later, for tracking automated cases. Cucumber, as the name suggests, allows you to define the content in the Gherkin Given/When/Then syntax. So the capability is there to link this in with BDD/automation efforts if required.

A few other points on tests then. Attachments are well supported, both at the test step level and the test case level. You can create sub tasks for tests which can be useful. There's also the ability to create 'pre-conditions' for tests. This means you can define a set of pre-conditions and link them to one or more test cases. Makes it easy to define setup steps that might be common across a range of different cases.

Next then we need to work out how test cases fit in with Sets, Plans and Runs.

A Test Set is another Jira issue type (which helps for searching, reporting, etc). It's easy to add a test to a set (from the test case view) or add many test cases to a set (from the set view). It's a many-to-many relationship, as you'd expect, so you're covered whichever issue you start from when building out sets.
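The many-to-many relationship can be pictured as two indexes kept in step, one per direction, which is why a test shows its sets and a set shows its tests. A minimal Python sketch; the class name and the PROJ-* keys are hypothetical and this is not Xray's implementation.

```python
from collections import defaultdict

class SetMembership:
    """Many-to-many link between tests and test sets, navigable from either side."""

    def __init__(self):
        self._sets_by_test = defaultdict(set)
        self._tests_by_set = defaultdict(set)

    def add(self, test_key, set_key):
        # Update both directions together so the two views never disagree.
        self._sets_by_test[test_key].add(set_key)
        self._tests_by_set[set_key].add(test_key)

    def sets_for(self, test_key):
        """What the test case view would list."""
        return set(self._sets_by_test.get(test_key, ()))

    def tests_in(self, set_key):
        """What the test set view would list."""
        return set(self._tests_by_set.get(set_key, ()))
```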

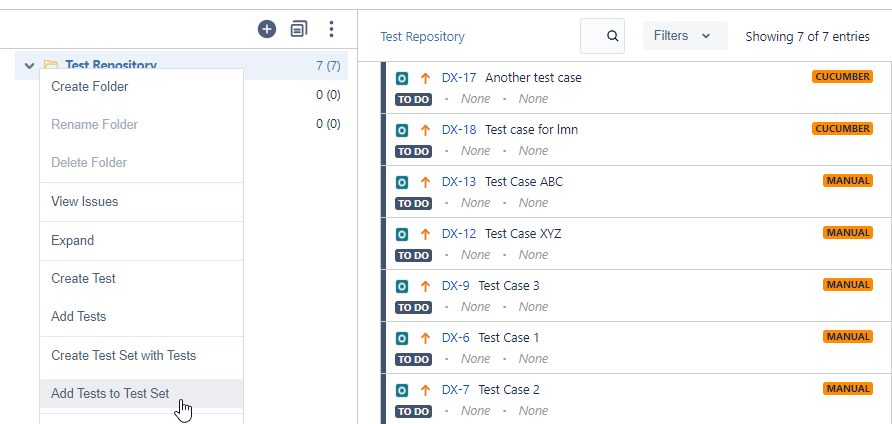

Create Test Set From Repository


From the testing board area you can also view your tests in the folder view and then add them to sets from there. It's a much more visual and intuitive approach to building out sets. Right click on a folder of tests and select 'Create Test Set with Tests'. Easy.

There's not a lot to Sets. As you'd expect it's a grouping mechanism. They're simple to work with. With Sets and the Test Repository (folder view) you have two ways to organise things. You can either use one and not the other. Or you can use both in conjunction. Depends on how clever and complex you want to get.

Either way Sets and the Repository help you manage the process of adding a group of tests to a plan. A plan is where we bring everything together as part of a distinct test execution effort. It's from the plan that we then go on to the execution phase.

So you create a test plan. Guess what? It's another Jira issue type. You can view your test plan as you would view any other Jira issue or you have the XRay Testing Board view. The Testing Board view is where you get a nice graphical view of everything.

There's a bit of a limitation with this view though: you can't add sets to your plan from it. You can add tests in this view, but not test sets. To add tests from a test set, you have to view the plan as a Jira issue in its own right.

Not a huge limitation, mind you. It's easy to flip between the two views. From the Jira issue view you can easily add one or more test sets and then flip to the board view.

One nice thing about test plans is that they are a Jira issue (of type 'Test Plan'). That means you can define Jira workflows and add the test plans to them. Perhaps you have a complicated review process, for example. You define that process as a workflow and link the 'Test Plan' issue type to it.

If you've defined your Environments, you can link the Plan to an environment. It's either one environment or all; you can't link to multiple environments. Not that this is a limitation: when you get to initiating the execution, you can pick multiple environments to run against.

Once the plan is in place, you're in a position to create an execution, which is basically the same thing as a test run. What's nice here is that you can create an execution containing only the tests in a certain status. Great if you need to re-test the failed tests from previous runs.

Once that execution is in play you can link it to your sprint. The execution of that plan then shows up on your sprint board. From there you can drag it across the board through In Progress to Done. Makes it simple to see what test plans you're executing as part of a sprint.

Back to the execution though. What does that look like and how effective is it?

The execution side of things is where XRay excels. Create that execution from the test plan, pull the execution onto your board and away you go. From the test execution you can click on the individual tests and then cycle through each test in turn.

Once you start on that execution you'll see the test case execution page. Here you have your custom fields, a list of all activity, comments, links to defects and screenshots (evidence). And of course a list of all the test steps you need to follow.

With XRay you have both an execution issue and a separate execution view (like a test runner view). As always there's a lot of data and fields to squeeze onto the execution view, and XRay have done a nice job of keeping this neat and tidy. It's easy to navigate, easy to enter data, easy to link to defects and easy to add screen shots. During execution you'll want to create defects. When you do, a table of the test steps is automatically added to the defect description. This'll save you a lot of grief entering steps to reproduce. Nice touch.

It's a pretty straightforward relationship: a Test Plan can have many executions. When viewing the execution it's easy to jump between the plan, execution and test cases. When you're executing the test steps the layout is well structured, clear and easy to use. You can add attachments to the test steps (e.g. evidence). It would be nice if you could drag and drop files rather than go through the upload file process though.

One smart feature is the ability to import execution results. This lets you work outside of Jira when running tests and then feed all the results back in when you're ready. Useful if you have a 3rd party running your tests who doesn't have access to your instance of Jira.
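As a rough illustration, results gathered offline (say, by that third party) could be converted into a JSON document for import. The sketch below assumes the field names of XRay's JSON execution-import format (`testExecutionKey`, `tests`, `testKey`, `status`, `comment`) as documented at the time of writing. The input records, the issue keys and the status mapping are hypothetical, and status spellings have differed between XRay Server/Data Center and Cloud, so check them against your version.

```python
import json

# Hypothetical offline results, e.g. exported by a third party with no Jira access.
OFFLINE_RESULTS = [
    {"test": "PROJ-101", "result": "pass", "note": "Login works"},
    {"test": "PROJ-102", "result": "fail", "note": "Timeout on save"},
]

# Assumed mapping onto XRay status names; Server/DC and Cloud versions have
# used different spellings (e.g. PASS vs PASSED), so check yours.
STATUS_MAP = {"pass": "PASSED", "fail": "FAILED"}


def build_xray_import(execution_key: str, results: list[dict]) -> dict:
    """Build a dict shaped like XRay's JSON execution-import format."""
    return {
        "testExecutionKey": execution_key,
        "tests": [
            {
                "testKey": r["test"],
                "status": STATUS_MAP[r["result"]],
                "comment": r["note"],
            }
            for r in results
        ],
    }


payload = build_xray_import("PROJ-200", OFFLINE_RESULTS)
print(json.dumps(payload, indent=2))
```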

What also works well is that you can add tests during the execution. Of course, tests added this way don't get added to other executions that are already in progress. There doesn't seem to be a way to add those new tests to the original test plan at the same time though. You may want to add a new test case during the execution and update the test plan. If you do, you have to add the test case twice (once to the execution and then to the plan). Not a huge issue but it could become a little irritating over time. I mean, it's not unheard of to want to update a test plan as you're executing it!

You have 20 testers running tests against your system. Tests passing. Tests failing. Raising defects all over the place. How do you know where you are with your test effort? Well you have the usual range of coverage, traceability and execution reports.

The reports do the job but there's not a huge range and they aren't particularly configurable. For example, when looking at coverage you can't group by Environment. You can filter to show a single environment; the trouble is you can't show the results for all environments grouped on one report.

One unique aspect of XRay reporting is that you can exclude results that are “non-final”. If a test case is being run several times (say, for different releases of the system) you can exclude the results that are still in a non-final status, that is, tests in a ToDo or Executing state. The previous pass or fail results for the same test case then take precedence. Quite a neat solution to the complexity of test management reporting: you only pick up the results that you're interested in as part of executing a test plan.
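To make the “non-final” idea concrete, here is a small Python model of the rule as described above: for each test, take the most recent result, optionally skipping results still in a ToDo or Executing state. This is our own illustration of the behaviour, not XRay's implementation; the `Run` record and its `order` field are hypothetical.

```python
from dataclasses import dataclass

# Statuses treated as "non-final" in this sketch (mirrors the ToDo/Executing
# example above; your XRay configuration may define others).
NON_FINAL = {"TODO", "EXECUTING"}


@dataclass
class Run:
    test_key: str
    status: str
    order: int  # higher = more recent run


def effective_status(runs: list[Run], exclude_non_final: bool = True) -> dict[str, str]:
    """Return the status that counts for each test: the latest run,
    optionally skipping runs still in a non-final status."""
    latest: dict[str, Run] = {}
    for run in sorted(runs, key=lambda r: r.order):
        if exclude_non_final and run.status in NON_FINAL:
            continue
        latest[run.test_key] = run  # later runs overwrite earlier ones
    return {key: run.status for key, run in latest.items()}


runs = [
    Run("PROJ-101", "PASSED", 1),
    Run("PROJ-101", "TODO", 2),       # re-run for the next release, not started
    Run("PROJ-102", "FAILED", 1),
    Run("PROJ-102", "EXECUTING", 2),  # re-run in progress
]
print(effective_status(runs))                           # {'PROJ-101': 'PASSED', 'PROJ-102': 'FAILED'}
print(effective_status(runs, exclude_non_final=False))  # {'PROJ-101': 'TODO', 'PROJ-102': 'EXECUTING'}
```

Note that in this model a test whose only runs are non-final simply drops out of the filtered view.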

There's only a limited number of reports. If you need more powerful reporting you could explore the Jira plugin eazyBI. This popular reporting tool for Jira has support for XRay and is likely to deliver everything you'll ever need on the reporting front.

https://eazybi.com/blog/xray-jira-test-management-reports-with-eazybi

If you have external automation tools or systems you'll probably want to plug them into XRay. This gives you visibility over automated execution and reporting. The usual suspects like Jenkins and Bamboo can be set up to fulfil this role. There's a plugin for Jenkins that lets you pull in a JUnit project, execute the tests and then push the test results to XRay.

If you're looking to build your own integration then the REST API is well supported. By well supported I mean you have API calls for everything you're ever likely to need, including support for custom fields and attachments. Keep the following point in mind though. As with other Jira plug-ins, for some calls you'll need to use the Jira API (for example, adding attachments at the test case level). For others you'll need to use the XRay API (for example, adding attachments at the step level). It all looks like it's built on Swagger, so it's quite easy to go to the API user guide and start trying things out.
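For a feel of what a home-grown integration involves, the sketch below prepares (but doesn't send) a POST of execution results to XRay. It assumes the XRay Server/Data Center import path `/rest/raven/1.0/import/execution` and basic authentication; XRay Cloud uses a different host, path and token-based authentication. The base URL, credentials and issue keys are placeholders. Attachments at the test case level would go through the Jira API instead, as noted above.

```python
import base64
import json
import urllib.request


def xray_import_request(base_url: str, user: str, password: str,
                        payload: dict) -> urllib.request.Request:
    """Prepare (but do not send) a results-import request.

    Assumes the XRay Server/Data Center path; check it against the API
    guide for your installation before relying on it.
    """
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/rest/raven/1.0/import/execution",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )


req = xray_import_request(
    "https://jira.example.com",  # hypothetical Jira base URL
    "ci-bot", "secret",          # hypothetical credentials
    {"testExecutionKey": "PROJ-200",
     "tests": [{"testKey": "PROJ-101", "status": "PASSED"}]},
)
print(req.get_method(), req.full_url)
# To actually send it: urllib.request.urlopen(req)
```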

I mentioned this 'Generic' test type in XRay earlier. If you want your automation framework to create new test records on demand then you'd do this with a Generic test. Perhaps you have an automation environment that isn't based on Cucumber or BDD. You need to execute the tests, create a new test record in XRay and report the test results. The Generic type is the way to go here. Perhaps this “neither Manual nor Cucumber” type of test isn't so odd after all?
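In XRay's JSON import format (as we understand it from the XRay documentation), a result can carry a `testInfo` block instead of an existing `testKey`. That is how an automation run can have XRay create Generic tests on demand. The helper below is hypothetical, and the exact `testInfo` fields (`summary`, `projectKey`, `type`, `definition`) and status names should be checked against your XRay version.

```python
def generic_test_result(summary: str, project_key: str,
                        definition: str, passed: bool) -> dict:
    """Build one entry for the "tests" list of an XRay JSON import.

    Instead of a testKey, the entry carries a testInfo block describing
    a Generic test, so XRay can create the test record on the fly.
    """
    return {
        "testInfo": {
            "summary": summary,
            "projectKey": project_key,
            "type": "Generic",
            "definition": definition,  # identifies the automated test
        },
        "status": "PASSED" if passed else "FAILED",
    }


entry = generic_test_result(
    "Checkout total includes VAT",           # hypothetical test summary
    "PROJ",                                  # hypothetical project key
    "shop.checkout.test_total_includes_vat", # hypothetical test identifier
    passed=True,
)
print(entry)
```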

There's enough, well thought out, functionality to support your automation requirements in XRay.

I like XRay. It's a well thought out tool that's well constructed. The user interface looks good although navigation takes a while to get used to. Simple enough to use but with plenty of advanced features to make the tool suitable for power users. No apparent support for test case version control. Yet smart enough under the bonnet to track the specific edits and executions of a test case. You have all the history and traceability your team is likely to need.

Reporting delivers what most teams will need but I wouldn't call it extensive. You can take things to another level, way ahead of most other tools, if you link in eazyBI reporting for Jira. Good support for a range of custom fields. Attachments supported at the test case and step level. And traceability for tracking what you've tested. It's all there and all put together in a structure that'll be familiar to most testers. A package that'll work for most teams.

Zephyr for Jira (ZFJ) is marketed as …

“A flexible test management solution inside Jira, perfect for Agile teams focusing on Test Design, Execution, and Test Automation”

On the Atlassian Marketplace home page they promote these 3 key aspects: …

I wasn't quite sure what “single-project" meant or how ZFJ would bring all these facets together. As we go through this review we'll find answers to both of these points.

To start with, ZFJ follows a pretty standard structure. The implementation is based around the standard relationships between the different entities. You create test cases and group them into test cycles. A cycle is then executed so that you have a set of test results for each test case within that cycle. A cycle only has one set of tests/results associated with it. If you need to run the tests again, you create a new cycle and associate the same set of tests with it.

There's no concept, or entity, called a test plan in ZFJ. You can't group entities into a test plan and report at a test plan level. In ZFJ you need to think of a project having 1 or more cycles. You then group or organise those cycles by versions. That is the versions you define for your Jira project. So each Version can have one or more cycles. You then report on the test cycles executed for that version. If you want you could also assign cycles to Jira components so that you get even more granularity.

That's the high-level structure then. Cases are grouped by cycle and each cycle has a set of results associated with it. A test case can exist in one or more cycles, and in each cycle that test case has its own isolated result value.

There's no test case version control in ZFJ though. You might run a test case in one cycle and pass it. Then you change that test case for a future cycle. That modification is then picked up by all the previous executions. It's a simple model and will be effective for many teams.
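The consequence of having no version control is easiest to see in a toy model. In the hypothetical Python sketch below, each execution holds a live reference to the test (matching the behaviour described for ZFJ), so editing the test changes what an old, passed execution appears to contain. For contrast, a versioned tool would effectively keep a snapshot, modelled here with `copy.deepcopy`. None of this is ZFJ's actual code.

```python
import copy
from dataclasses import dataclass, field


@dataclass
class Test:
    key: str
    steps: list[str] = field(default_factory=list)


@dataclass
class Execution:
    cycle: str
    test: Test   # whatever object this points at is what the execution "shows"
    status: str

    def steps_shown(self) -> list[str]:
        return self.test.steps


login = Test("PROJ-101", ["Open login page", "Enter credentials"])

# No versioning: the execution references the live test record.
live_run = Execution("Release 1.0 cycle", login, "PASS")
# Versioning (for contrast): the execution keeps a copy taken at run time.
snapshot_run = Execution("Release 1.0 cycle", copy.deepcopy(login), "PASS")

# Later the test is edited for a future cycle...
login.steps.append("Verify 2FA prompt")

# ...and the old, passed execution now shows a step that was never run.
print(len(live_run.steps_shown()))      # 3
print(len(snapshot_run.steps_shown()))  # 2
```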

Let's look at the individual components in a little more detail then.

As you'd expect, you create your tests in Jira as just another issue type. So your Jira queries with 'issuetype=Test' will work fine. As you start to create and view these tests you can create and view the usual artefacts, like attachments, links and sub-tasks.

One thing that is surprising: the handling of attachments is better (more Jira-like) than in ZSC and XRay. As you'd expect, you can view attachments directly from within ZFJ (unlike ZSC). Subtasks, a nice Jira feature, are baked into Tests. Links are all very Jira-like too, giving you the option to define the type of link (e.g. 'Relates to'). These are standard built-in Jira features though. What we want is the stuff that's specific to managing and defining our tests.
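Because Tests are a standard Jira issue type, the same 'issuetype=Test' JQL also works outside the Jira UI, for example through Jira's REST search resource. The sketch below only builds the request URL. It assumes the Jira Server/Data Center path `/rest/api/2/search` (Jira Cloud has been moving to newer search endpoints), and the base URL and project key are placeholders.

```python
from urllib.parse import urlencode


def jira_search_url(base_url: str, jql: str, fields: list[str]) -> str:
    """Build a Jira Server/Data Center search URL for a JQL query."""
    query = urlencode({"jql": jql, "fields": ",".join(fields)})
    return f"{base_url.rstrip('/')}/rest/api/2/search?{query}"


url = jira_search_url(
    "https://jira.example.com",             # hypothetical base URL
    "project = PROJ AND issuetype = Test",  # the same JQL you'd type in Jira
    ["summary", "labels"],
)
print(url)
```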

You add steps quickly by “Tabbing” to create new steps. It's so easy to build up your test steps. Unlike Zephyr Scale (ZSC), though, there's no ability to call out to other test cases or steps, and no ability to copy steps in from other test cases. Actually there is a way, but it's not built in as you write the steps. That's a big limitation in my book. Having said that, you can add attachments to steps, which you can't do easily in ZSC.

There are two views: Steps “Lite” and Steps “Detail”. This didn't seem to do a lot, mind you, other than show or hide the attachments column. Apparently you can add custom fields to test steps. The 'Test' issue type implemented in ZFJ is a standard Jira issue type, which means its custom fields are implemented as standard Jira custom fields.

What we can do then is add the metadata, details and executions to our test case. When we add the detailed steps we then have the ability to Execute or Add a test to a cycle. This execute capability lets us run a test in isolation, outside of a test cycle. Then we have the details section, which gives us the usual steps feature.

ZFJ doesn't have version control on the test cases. For many teams that won't be an issue. In fact it'll be a benefit for some as it reduces complexity and helps with usability. Yet, there are other teams where version control will be essential. As a team grows in maturity I'd say it's a feature that should be a core part of a test management tool.

There's a new feature that you can enable to let you configure BDD/Cucumber scenarios in your test cases. Once enabled you get the 'Create a BDD Feature' menu item. Click the link and you get the standard Jira Create Issue screen for creating a new User Story. You'll notice this issue has a label 'BDD feature'. What's that all about?

All becomes a bit clearer when you then view/edit the issue you've created. Once you've created the Story you can add one or more BDD scenarios to that Story. Adding a scenario then creates individual test cases that contain the scenarios. You're entering many scenarios directly from the Story.

It's a bit of a strange process that's not consistent with the way you get used to using Jira. And it doesn't conform to the usual approach of having a 'type' field you select on the test case. It does work though, and I like the concept. You want a Story to track the task of creating the BDD scenarios. That Story enables you to create the linked test cases that contain the BDD details. Quite a nice approach once you've worked it out.

One other big point with ZFJ is that there are no folders: no way to group tests into folders. You can organise them in folders as part of your executions (see cycles below); you just can't organise your repository of tests in folders. Pretty much every other tool out there allows you to group tests into folders. If you're migrating from other legacy tools this might be something you can't live without. You can label the test cases and search for them with the standard Jira search features. The thing is, this soon becomes restrictive if you have large numbers of records to deal with.

On to the test cycles part of things then. Creating a new test cycle is simple: view the Cycle Summary and then click the 'Create New Test Cycle' button. When you create the cycle you can link it to a version you've defined for your Jira project. You can also define the Build and Environment for the cycle. These build and environment values seem to be free text. Strange, as I'm not sure what value they'll bring when it comes to reporting and searching. We'll see about that as we move through this process.

So we've created the cycle; now we add the tests. Very easy to add them. You can add them individually, from a search filter or copy them straight from another cycle. One neat feature when copying from a previous cycle is that you can select by execution status too. For example, you can select all the tests from cycle XYZ that are in a 'Fail' state. That makes life easy for re-tests.

Unlike Zephyr Scale, you can't add tests from another Jira project. That's a feature that would be nice to have. Not a complete blocker, but it might be a bit restrictive. Now I understand what they mean when they say that ZFJ is a “Flexible, single-project test management” tool.

You view your test cycles based on the Version of the product, that is, the versions you define within your Jira project. You can add folders to the Cycles view, which helps keep things organised. The same test case can be added to many folders within a cycle, so you can use folders to categorise tests by environment, or by some other coverage entity.

The cycles have been well woven into the Jira way of managing versions. A version is either unreleased or released, and your cycles are broken down and linked to those released or unreleased versions. That makes it very easy to manage test execution against the version of the product under test.

When it comes to execution you'll find the 'E' (execute) button within the cycles area. Alternatively, from the Zephyr menu you can search for executions. From here you can multi-select executions too. When you multi-select you can associate the cases with defects, copy them to another cycle or move them. You can even update the test case status (pass, fail, etc.) from here.

Everything is where you need it when it comes to the execution of the tests in the cycle. Step through each test easily with the back and forward buttons. Update the results for each step. Add defects at the test case or step level. Update custom fields. It's all there. Well, I say that, but I didn't find a bulk step update feature. A minor point, but it's going to be something you'll miss if you have a lot of steps you want to update in one go.

Cycles and executions are not implemented as a Jira issue type. The consequence of this is that you can't search using JQL or the standard Jira search capability. Not particularly restrictive as there are ample search filters built in to ZFJ. Filters you can save and run on demand to see what needs to be executed. A range of pre-defined filters make it easy to see which tests are assigned to you for execution.

Adding attachments as execution evidence is simple too. A simple Ctrl+V pastes an image into the execution record. This works well when you're using your standard screen capture tools. You can add those attachments at the execution level or the step level if you want to.

Tracking of results can be undertaken at the test case level and the test step level. Both operate independently; there's no automatic roll-up feature here. You can set all the steps to fail and then set the test result to pass if you want to.
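Since ZFJ doesn't roll step results up into the test result, a pass/fail mismatch is easy to create by accident. If you export execution data for auditing, a check along these lines could flag inconsistent records. The roll-up rule here is our own hypothetical choice (any fail fails, any blocked blocks, all passes pass), using ZFJ-style status names; it isn't something ZFJ applies.

```python
def rolled_up_status(step_statuses: list[str]) -> str:
    """Hypothetical roll-up rule (ZFJ doesn't apply one):
    any FAIL fails the test, then any BLOCKED blocks it,
    all PASS passes it, and anything else counts as WIP."""
    if not step_statuses:
        return "UNEXECUTED"
    if "FAIL" in step_statuses:
        return "FAIL"
    if "BLOCKED" in step_statuses:
        return "BLOCKED"
    if all(s == "PASS" for s in step_statuses):
        return "PASS"
    return "WIP"


def inconsistent(test_status: str, step_statuses: list[str]) -> bool:
    """Flag executions whose manually set result disagrees with their steps."""
    return test_status != rolled_up_status(step_statuses)


print(inconsistent("PASS", ["FAIL", "FAIL"]))  # True: the case described above
```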

Also worth mentioning that there's no place to track time taken for execution. You could do this with a custom field. Problem is that this won't make it easy to roll up and get totals for execution across a complete test cycle. I've never seen a team use this sort of feature effectively so I'm not convinced you're losing much here.

On the reporting front you've the usual summary reports and dashboard. Actually the summary is more dashboard than report. Well thought out though. Clear visibility of the tests assigned to different product versions. Break downs by components and labels. High level statistics that every test manager will find essential.

Then we have “Test Metrics”: a range of graphs showing things like execution progress and status over a period of time. Executions by cycle and executions by tester are thrown in for good measure too. There's even a detailed list of the test cycles. You'll want to filter by version so that you're seeing the specific metrics for the release you're working on. With this filter your execution cycles are shown with all the relevant status info you need. It's pretty much the 'Test Plan' view that I thought was missing in ZFJ.

Then there's the traceability matrix report. Lots of detail here. You can select the issue type (e.g. 'Story') and then see the test cases assigned/linked to that story. Just what you'd expect. Those stories then show the tests linked to them. Drill down further to see the individual test results along with any defects that are linked. What's useful here is that the status of those defects is shown too.

If I'm being pedantic this isn't so much reporting. More dashboards. You can't configure a set of report parameters and then run that report when required. It's a range of dashboard displays. Very good dashboards mind you.

Now we come to integration with automation tools. Support for Cucumber, Selenium, JUnit, TestNG, SoapUI, UFT, EggPlant, and Tricentis Tosca. Bit strange that TestComplete isn't listed there seeing as that's a SmartBear product. I'm sure support for TestComplete isn't far off though. Anyway, two ways to work with this. You can manually upload execution results (not very automated!). Or deploy a tool called a Zbot. A Zbot watches for results files and then uploads the results in the background.
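The Zbot idea, a background agent that picks up new results files and uploads them, can be sketched in a few lines of Python. This is a simplified stand-in, not the Zbot itself: the `upload` callback is hypothetical (in practice it would call your test management tool's import API), and the polling parameters are arbitrary.

```python
import os
import tempfile
import time
from typing import Callable


def watch_results(folder: str, upload: Callable[[str], None],
                  suffix: str = ".xml", polls: int = 1,
                  interval: float = 0.0) -> set[str]:
    """Poll `folder` and hand each new results file to `upload` exactly once."""
    seen: set[str] = set()
    for i in range(polls):
        for entry in sorted(os.scandir(folder), key=lambda e: e.name):
            if entry.is_file() and entry.name.endswith(suffix) and entry.path not in seen:
                upload(entry.path)
                seen.add(entry.path)
        if i < polls - 1:
            time.sleep(interval)  # wait before the next scan
    return seen


# Usage: a temporary folder with one results file and one unrelated file.
with tempfile.TemporaryDirectory() as results_dir:
    for name in ("junit-1.xml", "notes.txt"):
        open(os.path.join(results_dir, name), "w").close()
    uploaded: list[str] = []
    watch_results(results_dir, uploaded.append, polls=2)
    print([os.path.basename(p) for p in uploaded])  # ['junit-1.xml']
```

A real agent would also need to wait until a file has been completely written before uploading it, and to remember what it has already sent across restarts.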

If that approach doesn't work for you then there's the REST API. Set up your API access (keys) through the Jira front end, then send REST requests directly to ZFJ. You'll find the documentation in Zpiary, where you can even experiment with those API requests. Looking through the API definitions you'll have access to all the core features you need. There are executions, cycles, tests, traceability, attachments, etc.

Then we have the BDD/Cucumber component. Here we create a standard test case (Jira issue type of Test) with a label of 'BDD Feature'. You create a dedicated BDD story in Jira/ZFJ, then add one or more BDD scenarios to that story. Once they're created you can download the BDD files from ZFJ, or even via the API.

Finally we have the integration to Bamboo and/or Jenkins. This allows you to push results from automated tests run under a CI tool and pull ZFJ into that CI workflow. You'll need to add the plugins to your CI tool (either Jenkins or Bamboo). Not sure I'd call this a feature rich capability but it is there if you need it. If you want to see the benefits of Continuous Testing this is a feature you'll want to make the most of.

On the whole another competent test management tool for Jira. Not quite the same level of features as Zephyr Scale in my opinion. As with any test management tool there's a lot of data/fields to squeeze into a single display panel. The ZFJ user interface looks a little unpolished if I'm honest.

You also need to accept the basic entity relationship model that's implemented in ZFJ. It's a simple test, cycle and execution model. Update a test case and that update is reflected in older executions. That'll be a big drawback for many teams. There's no version control capability for tests. Then again, version control of tests does lead to a whole world of complexity.

Attachments at the test step level are well implemented. Integration with the core Jira Version and Component entities works well. Building on the core Jira 'Relates to' capability for traceability is clever. This all reduces complexity in the user interface.

The dashboards work well and deliver all the core information you'd expect. There's no real concept of creating a report based on a set of report criteria. Again a simple model, that avoids creating a lot of complexity. This will fit well with many teams.

A couple of other points to consider. You can't use test cases across different projects. You can't call out to other test cases within a test. Limitations, yes, but offset by reduced complexity and an easy-to-use feel. If you're looking for a simple approach to test management, ZFJ works.